How did the AI industry as a whole develop in 2019?

1. NLP models kept setting new records, with Google and Facebook taking turns at the top;

2. GANs kept evolving and can now generate high-resolution human faces that are hard to tell from real ones;

3. Reinforcement learning conquered strategy games such as StarCraft 2.

Analytics Vidhya released the 2019 AI Technology Review Report, which summarizes the progress made by AI in different technology fields in the past year, and looks forward to new trends in 2020.

Analytics Vidhya is a well-known data science community. Its technology review report was written by several machine learning industry experts.

According to the report, NLP developed the fastest over the past year, CV is already relatively mature, and RL is only getting started and may see a big explosion next year.

QbitAI compiled and supplemented this article based on the report. Without further ado, let's go through the AI technologies of 2019 one by one:

Natural language processing (NLP): an explosion of language models, with deployment tools emerging

Transformer rules NLP

NLP took a huge leap in 2019, and the breakthroughs made in the field this year were unparalleled.

The report regards 2018 as a watershed for NLP; 2019 essentially built further on that foundation and let the field leap forward.

After the 2017 paper Attention Is All You Need was published, NLP models typified by BERT appeared. Since then, Transformer-based models have taken the SOTA results in NLP again and again.
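To make the Transformer's core operation concrete, here is a minimal sketch of scaled dot-product attention, the building block introduced in Attention Is All You Need. It is written in plain Python for readability rather than speed; the function names are illustrative and not taken from any library, and real models add learned projections, multiple heads, and masking on top of this.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1 (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """For each query, return a weighted average of the value vectors.

    The weights are softmax(q . k / sqrt(d_k)) over all keys.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs
```

When every key matches the query equally well, the weights are uniform and the output is simply the mean of the value vectors.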

Google's Transformer-XL is another Transformer-based model that outperforms BERT in language modeling. It was followed by OpenAI's GPT-2, which is known for generating human-like text.

In the second half of 2019, many innovations appeared around BERT itself, such as CMU's XLNet, Facebook AI's RoBERTa, and mBERT (multilingual BERT). These models kept pushing up the scores on RACE, SQuAD, and other leaderboards.

GPT-2 was finally released in full, with the 1.5-billion-parameter model open-sourced.

GPT-2 model address:

Further reading

Imitating Trump's tone so well it is hard to tell apart; Cornell blind-tests the 1.5-billion-parameter model: never so realistic, the strongest storytelling AI is fully here

Large pre-trained language models become the norm

Transfer learning was another trend that emerged in NLP in 2019. We are starting to see multilingual models pre-trained on large unlabeled text corpora, which lets them learn the underlying nuances of the language itself.

GPT-2, Transformer-XL, and similar models can be fine-tuned for almost any NLP task and work well with relatively little data.

Models such as Baidu's ERNIE 2.0 introduced the concept of continual pre-training, a major advance in pre-training methods. In this framework, different custom tasks can be introduced incrementally at any time.

New benchmarks launched

As a series of new NLP models brought huge performance gains, their benchmark scores approached the ceiling with only small gaps between them, and even exceeded the human average on GLUE.

These benchmarks are therefore no longer sufficient to reflect how far NLP models have come, and they do not help drive further improvement.

DeepMind, New York University, and the University of Washington, together with Facebook, proposed a new benchmark, SuperGLUE, which adds harder causal reasoning tasks and poses new challenges for NLP models.

Start thinking about NLP engineering and deployment

A large number of practical NLP resources emerged in 2019:

Stanford University open-sourced the StanfordNLP library, and HuggingFace released its Transformers library of pre-trained models. spaCy used the latter to build spacy-transformers, an industrial-grade library for text processing.

The Stanford NLP team said: "Like the large language models we trained in 2019, we will also focus on optimizing these models."

The problem with large models such as BERT, Transformer-XL, and GPT-2 is that they are so computationally intensive that using them in practice is nearly infeasible.

HuggingFace's DistilBERT shows that BERT can be shrunk by 40% while retaining 97% of its language understanding capability and running 60% faster.
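DistilBERT gets there through knowledge distillation: a small student model is trained to match the output distribution of a large teacher. Below is a minimal sketch of the soft-target term only, assuming plain lists of logits; the temperature value and the function names are illustrative assumptions, and this is not HuggingFace's actual training code, which combines several loss terms.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Softmax over logits / temperature; higher temperature gives softer targets."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    teacher = softmax_with_temperature(teacher_logits, temperature)
    student = softmax_with_temperature(student_logits, temperature)
    return sum(
        p * math.log(p / q)
        for p, q in zip(teacher, student)
        if p > 0.0
    )
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge.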

Google and the Toyota Technological Institute at Chicago developed ALBERT, another method for shrinking BERT, which achieved SOTA results on three NLP benchmarks (GLUE, SQuAD, RACE).

Further reading

NLP resource with 10,000+ GitHub stars gets an upgrade: deep PyTorch and TF interoperability, 32 of the latest pre-trained models integrated

Increased interest in speech recognition

In 2019 the NLP field showed renewed interest in audio data, with frameworks such as NVIDIA NeMo making it extremely easy to train end-to-end automatic speech recognition models.

In addition to NeMo, NVIDIA also open-sourced QuartzNet, another new end-to-end speech recognition architecture based on Jasper. QuartzNet is a small, efficient speech recognition model.

More focus on multilingual models

How can NLP truly be useful until it works with multilingual data?

This year saw renewed interest in multilingual approaches, with NLP libraries such as StanfordNLP shipping pre-trained models that can process text in more than 50 human languages. As you can imagine, this has had a huge impact on the community.

There were also successful attempts to build large BERT-like language models, through projects such as Facebook AI's XLM, mBERT (over 100 languages), and CamemBERT, which is tailored to French.

2020 trends

The above is a summary of the progress in the NLP field in 2019. What are the trends in this field in 2020?

Sudalai Rajkumar, an NLP expert and Kaggle Grandmaster, speculated on the main trends for 2020:

- The current trend will continue, with larger deep learning models trained on larger datasets;

- More production applications will be built, and smaller NLP models will help with this;

- Manually annotating text data is expensive, so semi-supervised labeling methods may become important;

- Interpretability of NLP models: understanding what a model has learned, so that its decisions are fair.

According to Sebastian Ruder, an NLP scholar and one of the authors of ULMFiT:

- Models will not only learn from huge datasets; more of them will also learn efficiently from fewer samples;

- Models will increasingly emphasize sparsity and efficiency;

- More attention will go to datasets in multiple languages.

Computer vision (CV): image segmentation becomes more refined, and AI fakes become ever more realistic

In computer vision, the number of papers accepted by international conferences such as CVPR and ICCV rose significantly this year. Below, let's review some of the most notable algorithms and implementations of 2019.

Kaiming He's Mask R-CNN is being surpassed

Mask Scoring R-CNN

On the COCO instance segmentation task, Mask Scoring R-CNN surpassed Kaiming He's Mask R-CNN, and it was therefore selected for an oral presentation at CVPR 2019, the top computer vision conference.

In models such as Mask R-CNN, the classification confidence of an instance is used as the measure of mask quality, but in fact mask quality and classification quality are not strongly correlated.

This paper from Huazhong University of Science and Technology studies the issue and proposes a new scoring method: the mask score.

Rather than relying only on detection to obtain classification scores, Mask Scoring R-CNN also learns a separate scoring rule for masks: the MaskIoU head.

By considering the classification score and the mask score together, Mask Scoring R-CNN can assess the quality of a predicted mask more fairly and improve the performance of the instance segmentation model.
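The scoring idea can be sketched in a few lines. In the toy code below, masks are nested lists of 0/1 values and the function names are illustrative. `mask_iou` computes the overlap that the MaskIoU head is trained to regress, and `mask_score` combines the classification confidence with an IoU estimate by multiplication, as the paper does; in the real model the IoU comes from the learned head, not from ground truth.

```python
def mask_iou(mask_a, mask_b):
    """Intersection over union of two binary masks of the same shape."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            if a and b:
                intersection += 1
            if a or b:
                union += 1
    return intersection / union if union else 0.0


def mask_score(classification_score, predicted_mask_iou):
    """Rescore an instance: class confidence times estimated mask quality."""
    return classification_score * predicted_mask_iou


predicted = [[1, 1], [0, 0]]
ground_truth = [[1, 0], [0, 0]]
iou = mask_iou(predicted, ground_truth)
rescored = mask_score(0.9, iou)
```

A confidently classified instance with a sloppy mask now ranks below an equally confident instance with a tight mask.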

The research team conducted experiments on the COCO dataset. The results show that Mask Scoring R-CNN has an AP improvement of about 1.5% on different backbone networks.

This paper was named one of the top ten papers in the first quarter of 2019 by Open Data Science.

The paper's first author is Huang Zhaojin, an intern at Horizon, from the team of Wang Xinggang, an associate professor at the School of Telecommunications of Huazhong University of Science and Technology. Wang Xinggang is also one of the paper's authors.

SOLO

SOLO, a new instance segmentation method proposed by ByteDance intern Wang Xinlong, is a single-stage method with a simpler framework, yet its performance also exceeds Mask R-CNN.

The core idea of SOLO is to reformulate instance segmentation as two problems: category-aware prediction and instance-aware mask generation.
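One way to picture this: SOLO divides the image into an S x S grid, and the cell that contains an object's center is responsible for both that object's category and its mask. The sketch below shows only this assignment step, with hypothetical function names and a row-major channel layout; it is an illustration of the idea, not the authors' implementation.

```python
def center_to_grid_cell(center_x, center_y, image_width, image_height, grid_size):
    """Return the (row, col) of the grid cell that contains an object center."""
    col = min(int(center_x / image_width * grid_size), grid_size - 1)
    row = min(int(center_y / image_height * grid_size), grid_size - 1)
    return row, col


def mask_channel_index(row, col, grid_size):
    """Map a grid cell to the output channel that holds its instance mask."""
    return row * grid_size + col


row, col = center_to_grid_cell(50, 150, 200, 200, grid_size=4)
channel = mask_channel_index(row, col, grid_size=4)
```

Because each cell owns one mask channel, two objects whose centers fall in different cells are separated without any bounding-box proposals.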

Experimental results on the COCO dataset show that SOLO generally outperforms previous mainstream single-stage instance segmentation methods, and on some metrics it also exceeds the enhanced Mask R-CNN.

Related address

Further reading

Performance surpasses Kaiming He's Mask R-CNN! HUST master's student open-sources a new image segmentation method | CVPR19 Oral

EfficientNet

EfficientNet is a model scaling method developed by Google on top of AutoML. It reached 84.1% accuracy on ImageNet, setting a new record.

Although its accuracy is only 0.1% higher than that of the previous SOTA model, GPipe, the model is smaller and faster, with far fewer parameters and FLOPs, and is 10 times more efficient.
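The scaling rule behind EfficientNet is compound scaling: network depth, width, and input resolution are all scaled together by a single coefficient. A minimal sketch follows, using the base coefficients reported in the EfficientNet paper (1.2, 1.1, 1.15); the function names are illustrative, and the real models also round channel counts and layer counts to integers.

```python
# Base coefficients from the EfficientNet paper, found by a small grid search.
ALPHA = 1.2   # depth multiplier per step
BETA = 1.1    # width multiplier per step
GAMMA = 1.15  # resolution multiplier per step


def compound_scale(phi):
    """Return (depth, width, resolution) multipliers for compound coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi


def flops_multiplier(phi):
    """Approximate FLOPs growth: linear in depth, quadratic in width and resolution."""
    depth, width, resolution = compound_scale(phi)
    return depth * width ** 2 * resolution ** 2
```

The coefficients are chosen so that each increment of phi roughly doubles the FLOPs, which keeps the three dimensions balanced as the model grows.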

Its authors are Mingxing Tan, an engineer from Google Brain, and Quoc V. Le, the chief scientist.

Related address

GitHub:

paper:

Further reading

Google's open source scaling model EfficientNets: ImageNet achieves record accuracy and improves efficiency 10 times

Detectron2

This powerful PyTorch object detection library comes from Facebook.

Compared with the original Detectron, it trains faster, has more features, and supports more models. It once topped GitHub's trending list.

In fact, Detectron2 is a complete rewrite of the original Detectron: the first generation was implemented in Caffe2, and in order to iterate model design and experiments faster, Detectron2 was written from scratch in PyTorch.

In addition, Detectron2 is modular, and users can plug their own custom modules into any part of an object detection system.

This means that many new studies can be written in hundreds of lines of code, and the new implementation can be completely separated from the core Detectron2 library.

On top of all the models available in the first generation (Faster R-CNN, Mask R-CNN, RetinaNet, DensePose), Detectron2 adds new models such as Cascade R-CNN, Panoptic FPN, and TensorMask.

Related address

GitHub:

Further reading

#1 on GitHub Trending: the powerful PyTorch object detection library Detectron2, with faster training and support for more tasks

Stronger GANs

In 2019, GANs remained active.

For example, the second-generation VQ-VAE from Google DeepMind generates images that are sharper, more realistic, and more diverse than BigGAN's.

BigBiGAN not only generates high-quality images but also set new records on image classification tasks.

SinGAN, jointly produced by the Israel Institute of Technology and Google, won the best paper award at ICCV 2019.

Nvidia's StyleGAN also evolved into StyleGAN2, making up for various defects of the first generation.

Further reading

The best GAN ever! Large high-definition pictures of faces and animals that are hard to tell from real ones: DeepMind releases second-generation VQ-VAE

Setting a new ImageNet record, GANs do more than fake images! DeepMind uses one for image classification and beats dedicated classifier AI

If not for StyleGAN2, I would have thought the first generation was the pinnacle: Nvidia's face generator evolves to fix major flaws

2020 trends

Looking ahead to 2020, Analytics Vidhya believes the focus in computer vision will remain on GANs:

New methods such as StyleGAN2 are generating increasingly realistic face images, so detecting deepfakes will become increasingly important. Both vision and audio research will do more work in this direction.

Meta-learning and semi-supervised learning are another major research direction for 2020.

Reinforcement learning (RL): StarCraft and Dota both conquered, with greater usability ahead

In 2019, existing reinforcement learning methods were scaled up to larger computing resources and made some progress.

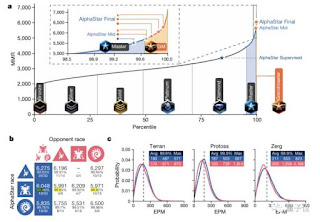

Over the past year, reinforcement learning has solved a series of complex environments that were previously out of reach, for example by defeating top human professional players in games such as Dota 2 and StarCraft 2.

The report notes that although these advances have attracted great attention from the media, the current approach still has some problems:

- A large amount of training data is required, and it can only be obtained when a sufficiently accurate and fast simulation environment exists. That is the case for many video games but not for most real-world problems.

- Because of this training regime, large-scale reinforcement learning algorithms feel as if they merely produce policies from an overly dense sampling of the problem space, rather than learning the underlying causality in the environment and generalizing intelligently.

- Similarly, almost all existing deep RL methods are very brittle with respect to adversarial examples, out-of-domain generalization, and one-shot learning, and there is currently no good solution.

The main challenge for deep RL is therefore to shift gradually from handling deterministic environments to more fundamental advances, such as generalization, transfer learning, and learning from limited data. The research trends of some institutions already show this.

First, OpenAI released a new Gym-like environment that uses procedural level generation to test the generalization ability of deep RL algorithms.

Many researchers are beginning to question and re-evaluate what we actually mean by "intelligence". We are starting to better understand previously undiscovered weaknesses of neural networks and to use that knowledge to build better models.

Further reading

Lost 1-10, collapsing in 5 minutes! StarCraft 2 pros defeated by AI for the first time in history as AlphaStar makes its name in a single battle

Crushing 99.8% of human opponents, Grandmaster with all three races! StarCraft AI published in Nature, with its technology fully disclosed for the first time

2:0! Dota 2 world champion OG crushed by OpenAI, with the humans taking only two outer towers in the whole match

How was Dota 2 champion OG crushed by AI? OpenAI's full paper on three years of work is finally released

2020 trends

All in all, the forecast trends for 2020 are as follows:

- Learning and generalizing from limited data will be the central theme of reinforcement learning research;

- Breakthroughs in reinforcement learning are closely tied to progress in deep learning;

- More and more studies will use the power of generative models to enhance various training processes.

Interdisciplinary research: AI goes deep into multiple disciplines

As artificial intelligence technology develops, interdisciplinary research has also become a hot topic this year. AI now frequently appears in medicine, brain-computer interfaces, and even mathematics research.

Brain-computer interface

In brain-computer interfaces, a field that both Musk and Facebook have bet on, deep learning is helping researchers decode what the brain is thinking.

For example, a study from UC San Francisco published in Nature uses deep learning to read the brain directly and convert brain signals into speech.

Previous speech-synthesis brain-computer interfaces could only generate 8 words per minute, while the new device in this study can generate 150 words per minute, close to the speed of natural human speech.

Medicine

In the medical field, machine learning technology does not only play a role in medical image recognition.

For example, a study by the German Institute of Tissue Engineering and Regenerative Medicine used the deep learning algorithm DeepMACT to automatically detect and analyze cancer metastases throughout the entire mouse body.

Using this technology, scientists observed for the first time the tiny metastatic sites formed by individual cancer cells, and they increased work efficiency by more than 300 times.

"Currently, the success rate of oncology clinical trials is about 5%. We believe that DeepMACT technology can greatly improve the drug development process in preclinical research. This may therefore help find stronger drug candidates for clinical trials and hopefully help save many lives," said study author Ali Ertürk.

Mathematics

Mathematics is the foundation of the natural sciences, and as AI keeps developing, AI has begun to give something back to it.

A new model published by Facebook can accurately solve differential equations and indefinite integrals within 1 second.

Not only that, its performance also surpasses the commonly used Mathematica and Matlab.

Integration and solving differential equations can both be viewed as transforming one expression into another. The researchers consider this a special case of machine translation that can be solved with NLP methods.

The method is mainly divided into four steps:

- Represent mathematical expressions as trees;

- Introduce a seq2seq model;

- Generate random expressions;

- Count the number of expressions.
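The first two steps are what make the problem look like translation: once an expression is a tree, it can be flattened into a token sequence that a seq2seq model can read and write. Here is a minimal sketch, assuming a hypothetical tuple encoding `(operator, child, ...)` for tree nodes and prefix (Polish) notation for the sequence; it is an illustration, not the researchers' code.

```python
def to_prefix(tree):
    """Flatten an expression tree into a list of prefix-notation tokens.

    Internal nodes are tuples (operator, child, ...); leaves are numbers
    or variable names.
    """
    if isinstance(tree, tuple):
        operator, *children = tree
        tokens = [operator]
        for child in children:
            tokens.extend(to_prefix(child))
        return tokens
    return [str(tree)]


# 2 + 3 * x as a tree: the root is "+", and its right child is the "*" subtree.
expression = ("+", 2, ("*", 3, "x"))
tokens = to_prefix(expression)
```

Prefix notation needs no parentheses, so every tree maps to exactly one token sequence and back.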

The researchers evaluated the model's accuracy at solving calculus problems on a dataset of 5,000 equations.

The results show that for differential equations, beam search decoding greatly improves the model's accuracy.

On a test set of 500 equations, the best-performing commercial software is Mathematica.

When the new method runs a beam search of size 50, the model's accuracy improves from 81.2% to 97%, far better than Mathematica (77.2%).
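Why does a wider beam help? Greedy decoding commits to the single most likely token at each step, while beam search keeps several partial sequences alive and can recover a better overall answer. The sketch below is a generic beam search over a hypothetical `toy_model` whose probabilities are made up for illustration; it is not the decoder used in the study.

```python
import math


def beam_search(next_token_log_probs, start_token, end_token, beam_size, max_len):
    """Return finished sequences as (tokens, log_prob), best first."""
    beams = [([start_token], 0.0)]
    finished = []
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            for token, log_p in next_token_log_probs(tokens).items():
                candidates.append((tokens + [token], score + log_p))
        # Keep only the beam_size most probable extensions.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_size]:
            if tokens[-1] == end_token:
                finished.append((tokens, score))
            else:
                beams.append((tokens, score))
        if not beams:
            break
    finished.extend(beams)  # include sequences cut off at max_len
    return sorted(finished, key=lambda c: c[1], reverse=True)


def toy_model(tokens):
    """Hypothetical next-token distribution, keyed on the last token only."""
    table = {
        "<s>": {"a": 0.6, "b": 0.4},
        "a": {"x": 0.5, "y": 0.5},
        "b": {"z": 0.9, "w": 0.1},
    }
    probs = table.get(tokens[-1], {"</s>": 1.0})
    return {token: math.log(p) for token, p in probs.items()}


greedy = beam_search(toy_model, "<s>", "</s>", beam_size=1, max_len=5)
wide = beam_search(toy_model, "<s>", "</s>", beam_size=2, max_len=5)
```

With a beam of 1 the search locks onto "a" (probability 0.6) and ends with a sequence of total probability 0.30; with a beam of 2 it keeps "b" alive and finds "b z" at 0.36.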

Moreover, for some problems that Mathematica and Matlab cannot solve, the new model gives valid solutions.

Looking forward to machine learning in 2020

From NLP to computer vision to reinforcement learning, there are many things to look forward to in 2020. Here are some of the key trends predicted by Analytics Vidhya for 2020:

- In 2020, the number of machine learning jobs will continue to grow exponentially. Largely thanks to developments in NLP, many companies will look to expand their teams, so this is a good time to enter the field.

- The role of the data engineer will become even more important.

- AutoML took off in 2018 but did not reach the expected heights in 2019. Next year, as off-the-shelf solutions from AWS and Google Cloud become more prominent, we should pay more attention to it.

- Will 2020 be the year we finally see a breakthrough in reinforcement learning? It has been in the doldrums for several years, as moving research solutions into the real world has proven to be a major obstacle.
